STOP! These 7 Technical SEO Issues Need Your Attention NOW.

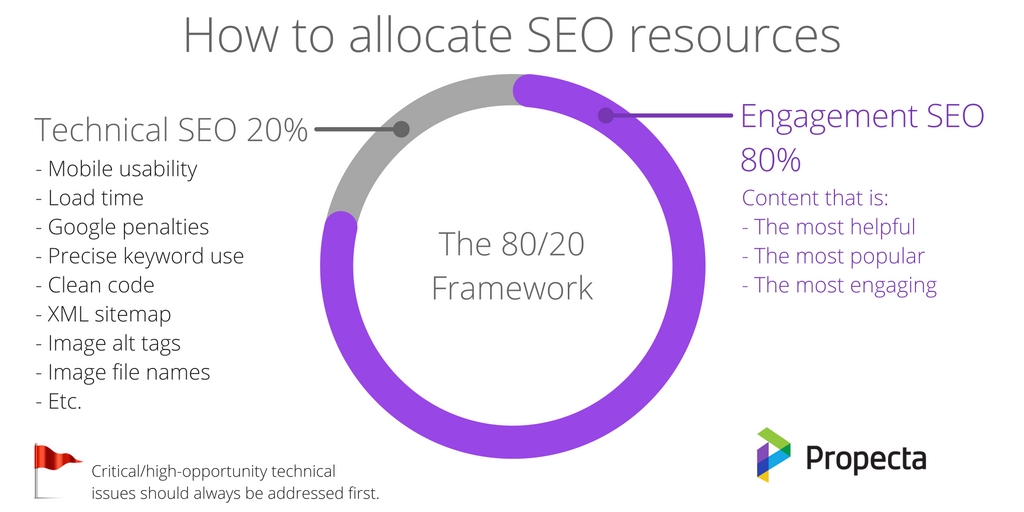

At Profound Strategy, we recommend an 80/20 approach to SEO: spend 80% of your time on engagement SEO tasks and 20% of your time on technical SEO tasks. It’s the engagement SEO work that makes the best use of keywords, answers user intent, creates a good user experience, and—overall—drives rankings, clicks, and revenue. No amount of technical SEO work will drive good traffic to bad content.

But certain technical tasks are still vitally important to an effective SEO strategy. Technical SEO issues can undermine all of your engagement SEO efforts if search engines aren’t able to find, crawl, and render all of the high-quality content you’re publishing.


Researching technical SEO tasks, or running an automated technical audit, can result in a daunting list of to-dos that could take much more than 20% of your team’s time. Instead of neglecting engagement SEO to focus on low-value technical SEO tasks, focus your attention on the most important technical problems on your site—the things that will prevent your SEO from really performing.

1. Critical Pages Aren’t Being Indexed

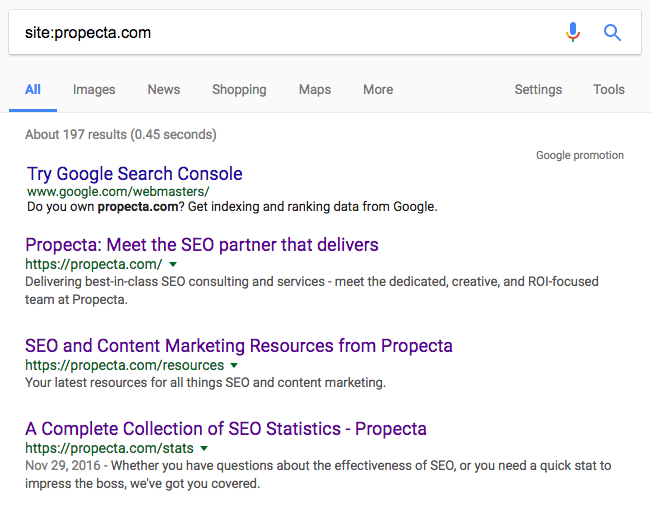

Before a page can appear in search results, it must be crawled and indexed by search engines. If a site is blocking search engines from finding or crawling important pages, it’s impossible to drive organic traffic to those pages. To determine if the most important pages are being indexed, conduct a search using the following query: site:[your domain name].

The search engine will return results for every page of your site that it has indexed. Browse through the list to make sure all critical pages have results. If they do, you’re golden. If they don’t, you may be unintentionally blocking search crawlers.

Crawling and indexing problems are commonly caused by:

- Incorrectly formatted robots.txt files

- Server setups that limit crawl rates

- Noindex directives in robots meta tags
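To rule out the first and third causes for a specific page, you can test its URL against your robots.txt rules and scan its HTML for a noindex directive. The sketch below uses only Python's standard `urllib.robotparser` and `html.parser` modules. The robots.txt contents, the URLs, and the `check_page` helper are hypothetical examples. The sketch does not check the `X-Robots-Tag` HTTP header, which can also carry noindex, and it can't detect server-side crawl limits.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt; in practice, download your site's /robots.txt.
ROBOTS_TXT = """\
User-agent: *
Disallow: /products/
"""


class RobotsMetaParser(HTMLParser):
    """Records whether the page has a robots meta tag containing noindex."""

    def __init__(self):
        super().__init__()
        self.noindex = False

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attrs = dict(attrs)
        name = (attrs.get("name") or "").lower()
        content = (attrs.get("content") or "").lower()
        if name in ("robots", "googlebot"):
            directives = {d.strip() for d in content.split(",")}
            # "none" is shorthand for "noindex, nofollow".
            if "noindex" in directives or "none" in directives:
                self.noindex = True


def check_page(url, html, robots_txt=ROBOTS_TXT, user_agent="Googlebot"):
    """Return reasons a page might be kept out of the index (empty if none found)."""
    problems = []
    robots = RobotFileParser()
    robots.parse(robots_txt.splitlines())
    if not robots.can_fetch(user_agent, url):
        problems.append("blocked by robots.txt")
    meta = RobotsMetaParser()
    meta.feed(html)
    meta.close()
    if meta.noindex:
        problems.append("noindex meta tag")
    return problems


print(check_page("https://example.com/products/widget", "<p>Widget</p>"))
print(check_page("https://example.com/about",
                 '<meta name="robots" content="noindex, follow">'))
```

Running a check like this against each critical page that's missing from the site: results narrows down which cause to investigate first.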

If code or server issues aren’t causing the problem, it could be that the navigational structure of your site is the culprit. If important pages are buried deep within your site, it’s possible that search engine crawlers—and users—simply can’t find your important pages. Take time to review the navigational structure of your site, and move links for important pages to more prominent locations.
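One way to find buried pages is to measure click depth: the minimum number of links a crawler has to follow from your homepage to reach a page. A breadth-first search over your internal links computes exactly that. The link graph, the list of important pages, and the three-click threshold below are illustrative assumptions; in practice the graph would come from a site crawler's export of internal links.

```python
from collections import deque


def click_depths(links, start="/"):
    """Breadth-first search: minimum clicks from `start` to every reachable page."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit in BFS = shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def buried_pages(links, important, max_depth=3):
    """List important pages deeper than max_depth clicks (None = unreachable)."""
    depths = click_depths(links)
    return [(page, depths.get(page)) for page in important
            if depths.get(page) is None or depths[page] > max_depth]


# Hypothetical internal-link graph: page -> pages it links to.
SITE = {
    "/": ["/blog/", "/about/"],
    "/blog/": ["/blog/page-2/"],
    "/blog/page-2/": ["/blog/page-3/"],
    "/blog/page-3/": ["/pricing/"],
}

print(buried_pages(SITE, ["/about/", "/pricing/", "/contact/"]))
# -> [('/pricing/', 4), ('/contact/', None)]
```

Here the pricing page is only reachable through paginated blog archives, and the contact page isn't linked at all: both are candidates for a more prominent link.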

2. Slow Load Speeds

Slow-loading sites and pages create a cascade of negative impacts:

- Page speed is a ranking factor for Google, so slow-loading pages can negatively impact your position in the SERPs.

- Users have little patience for slow-loading sites—more than half of mobile site visits are abandoned if a page takes more than three seconds to load, and each second of delay in page load speeds can reduce conversions by 7%.

- When users leave your site because of slow load times, your bounce rate rises, and the resulting low engagement may further reduce your search rankings.

Two common causes of slow page loads are excessive tracking code and oversized image files.

To eliminate delays caused by excessive tracking code, put a system in place for managing tracking code installations. Google Tag Manager is an excellent tool for installing and deploying tracking codes: it lets you view and manage all of the campaigns being tracked, from both Google and third-party systems, in one place. Review tracking codes regularly and deactivate any that are no longer in use.
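Before a tracking-code review, it can help to list which third-party hosts a page loads scripts from. This minimal sketch counts external `<script src>` hosts in a page's HTML using only the Python standard library. It rests on a few assumptions: it only sees scripts written directly into the HTML (not ones injected later by Google Tag Manager or other loaders), and it treats every host other than `own_host`, including your own subdomains, as third-party. The sample HTML and hostnames are hypothetical.

```python
from collections import Counter
from html.parser import HTMLParser
from urllib.parse import urlparse


class ScriptSrcParser(HTMLParser):
    """Collects the src attribute of every <script> tag in the HTML."""

    def __init__(self):
        super().__init__()
        self.sources = []

    def handle_starttag(self, tag, attrs):
        if tag == "script":
            src = dict(attrs).get("src")
            if src:
                self.sources.append(src)


def third_party_script_hosts(html, own_host):
    """Count external script files per host, excluding `own_host`."""
    parser = ScriptSrcParser()
    parser.feed(html)
    parser.close()
    hosts = Counter()
    for src in parser.sources:
        host = urlparse(src).netloc  # empty for relative paths like /js/app.js
        if host and host != own_host:
            hosts[host] += 1
    return hosts


# Hypothetical page markup.
PAGE = """
<script src="https://analytics.vendor-a.example/tag.js"></script>
<script src="//pixel.vendor-b.example/p.js"></script>
<script src="https://analytics.vendor-a.example/tag.js"></script>
<script src="/js/app.js"></script>
"""

for host, count in third_party_script_hosts(PAGE, "www.example.com").most_common():
    print(host, count)
```

A host that appears more than once may point to a duplicated installation, and any host nobody on the team recognizes is a good candidate for removal.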

To eliminate delays caused by oversized image files, resize and compress images before uploading them. If you upload oversized files and rely on CSS to scale them down, browsers still download the full-size file, which can severely slow page loads. Ideally, uploaded images should be sized to fit their display containers, but when that's not possible, these general guidelines can help reduce load times:

- Background images should be no larger than 10KB.

- Banners and header images should be no larger than 60KB.

- High-resolution photographs should be no larger than 100KB.
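The size guidelines above can be turned into a simple pre-upload check. In this sketch the category labels and the `oversized_images` helper are hypothetical, 1KB is assumed to be 1,024 bytes, and real file sizes could come from `os.path.getsize`.

```python
# Size budgets in KB, from the guidelines above (1KB assumed to be 1,024 bytes).
BUDGET_KB = {"background": 10, "banner": 60, "photo": 100}


def oversized_images(images):
    """images: iterable of (path, category, size_in_bytes).

    Returns (path, size_kb, budget_kb) for every image over its budget.
    """
    offenders = []
    for path, category, size_bytes in images:
        size_kb = size_bytes / 1024
        budget = BUDGET_KB[category]
        if size_kb > budget:
            offenders.append((path, round(size_kb, 1), budget))
    return offenders


# Hypothetical files; real sizes could come from os.path.getsize(path).
IMAGES = [
    ("img/texture.jpg", "background", 8 * 1024),
    ("img/hero.jpg", "banner", 250 * 1024),
    ("img/team.jpg", "photo", 150_000),
]

for path, size_kb, budget in oversized_images(IMAGES):
    print(f"{path}: {size_kb}KB (budget {budget}KB)")
```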

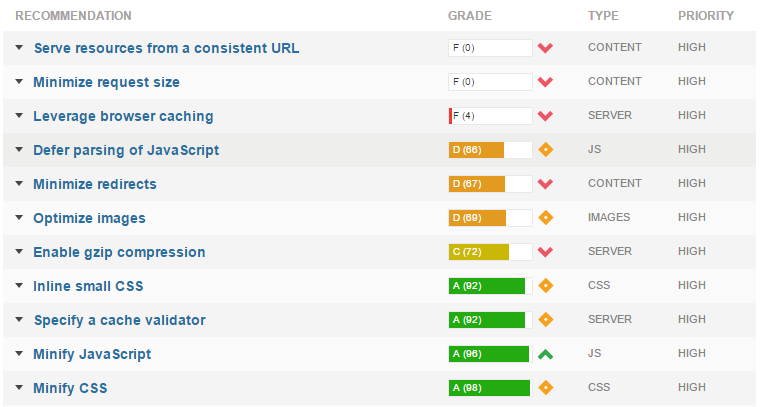

Keep in mind that each site is different. While excessive tracking codes and oversized image files are frequent offenders, there are dozens of other issues that could be impacting page load speeds. To establish a prioritized list of offenders, run your site through GTmetrix. GTmetrix will analyze the speeds at which different page elements load, and will offer a grade and suggestions for improving page speeds.

If items with low or failing grades can be resolved, place them high on your technical SEO to-do list.

3. Missed Opportunities for Rich Snippets

Rich snippets, rich cards, and AMP carousels are becoming increasingly prevalent in search results. In 2016, Google added rich cards to search results for image searches and online courses, and added an AMP-only carousel featuring top news stories. Those were in addition to preexisting rich snippets for reviews, videos, and upcoming events—just to name a few.

To display rich snippets in search results or to appear in rich cards, sites must add structured data, typically Schema.org vocabulary expressed as JSON-LD, microdata, or RDFa. However, marking up every page of a large site is a labor-intensive initiative that may or may not yield justifiable results. Instead, take time to identify important queries your site currently ranks for. If rich snippets or cards appear for those queries, consider adding structured data to the pages that rank for them.
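For illustration, here is one way to generate Schema.org markup for an upcoming event in JSON-LD, the structured data format Google recommends where a site's setup allows it. The event details and the `event_json_ld` helper are hypothetical, and the properties shown are a minimal subset: Google's structured data documentation lists the properties each rich result type actually requires, and the Rich Results Test can validate the output.

```python
import json


def event_json_ld(name, start_date, venue, street, city):
    """Return a <script> tag holding Schema.org Event markup as JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Event",
        "name": name,
        "startDate": start_date,  # ISO 8601, e.g. "2025-09-18T19:00-05:00"
        "location": {
            "@type": "Place",
            "name": venue,
            "address": {
                "@type": "PostalAddress",
                "streetAddress": street,
                "addressLocality": city,
            },
        },
    }
    # Escape "</" so a value can't prematurely close the <script> element.
    body = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{body}\n</script>'


print(event_json_ld("Intro to Technical SEO", "2025-09-18T19:00-05:00",
                    "Example Conference Center", "123 Main St", "Austin"))
```

The resulting tag can be placed in the page's HTML; generating it from the same data that renders the visible page helps keep the markup and the content in sync.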

4. Google Penalties

Google penalties can be devastating to a site's SEO. If Google has taken a manual action against your site, your rankings could drop, or your site could be removed from search results altogether. There are several reasons why Google might penalize your site. Most involve deliberate attempts to deceive search engines, but a few can happen unintentionally:

- Your site has been hacked and you aren’t yet aware.

- Google has received a high number of copyright removal notices for your site. This is usually the result of writers, users, or other contributors uploading images, videos, or content taken from other websites or publications.

- You have an unnatural backlink profile, typically the result of paying a black-hat SEO company to help increase rankings.

- Your site has an excessive amount of user-generated spam. This spam commonly appears in comment threads or forum posts, and because spammers generally target older posts, it can easily go unnoticed if you don't have a strict monitoring process.

- Your site content provides little value. It’s possible that you’ve focused more effort on monetizing your site than on making it useful for users. If the content on your site is shallow, search engines may interpret your site as spam.

To find out if your site has been penalized, check for manual actions listed in Google Search Console:

- Expand “Security & Manual Actions.”

- Click “Manual actions.”

If any manual actions are listed for your site, follow Google’s instructions for resolving the issue. You may need to get rid of content created by hackers, disavow unnatural backlinks, delete or revise shallow content pages, or remove spam comments left by site spammers.
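If you do need to disavow links, Google's disavow tool accepts a plain text file with one entry per line: `domain:example.com` to disavow a whole domain, a full URL to disavow a single page, and lines beginning with `#` as comments. This sketch assembles such a file from lists you've already reviewed by hand. The domains, the URL, and the `build_disavow_file` helper are hypothetical, and Google recommends trying to get unnatural links removed at the source before disavowing them.

```python
def build_disavow_file(domains=(), urls=(), note=None):
    """Return disavow-file text: optional # comment, domain: lines, then URLs."""
    lines = []
    if note:
        lines.append(f"# {note}")
    # Deduplicate and sort so the file is easy to review and diff over time.
    lines += [f"domain:{d}" for d in sorted(set(domains))]
    lines += sorted(set(urls))
    return "\n".join(lines) + "\n"


# Hypothetical entries from a manual backlink review.
text = build_disavow_file(
    domains=["spammy-links.example", "paid-links.example", "spammy-links.example"],
    urls=["http://blog.example/sponsored-post"],
    note="Links bought through a previous SEO vendor",
)
print(text, end="")
```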

5. Improper Redirect Setup

Improperly configured redirects can cause severe issues, including loss of PageRank and loss of traffic. Common redirect problems include:

- Using 302 redirects instead of 301 redirects: 302s are designed for temporary redirects, and 301s are designed for permanent ones. If you have 302 redirects set up for pages that will never return, take time to convert them to 301 redirects.

- Neglecting to redirect replaced pages: if you have a high number of 404 errors listed in Google Search Console and aren't sure why, it's probably the result of a recent site redesign or change that altered your page URLs. Review the 404 errors, and set up 301 redirects for any pages that have equivalent counterparts at new URLs.

- Redirect loops: when major site changes require extensive redirect rules, it's not uncommon for mistakes in the redirect file to create redirect loops. If search engines aren't indexing pages or are reporting redirect errors, it's time to audit the redirect file.

- Redirecting old pages to new pages with irrelevant content: sometimes, pages are just gone. While a long list of 404 errors in Google Search Console can make it seem like there's a problem with your site, it's not an issue if the pages listed were intentionally removed. Don't redirect all removed pages to your homepage to clear the errors; search engines such as Google may treat those redirects as soft 404s rather than legitimate moves.
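Several of the problems above can be caught by a script that reads your redirect map: 302s, blanket redirects to the homepage, and loops. This is a sketch under assumptions: the redirect map is a hypothetical dictionary from source path to (status code, target path), such as you might export from a server config or CMS plugin, and a flagged homepage redirect only means someone should confirm the target is relevant, not that it's definitely wrong.

```python
def audit_redirects(redirects, homepage="/"):
    """redirects: {source: (status_code, target)}. Returns (source, finding) pairs."""
    findings = []
    for source in sorted(redirects):
        status, target = redirects[source]
        if status == 302:
            findings.append((source, "302: switch to 301 if the move is permanent"))
        if target == homepage:
            findings.append((source, "redirects to homepage: confirm it is relevant"))
        # Follow the redirect chain; reaching a URL we've already seen means a loop.
        path = [source]
        current = target
        while current in redirects:
            if current in path:
                findings.append((source, "loop: " + " -> ".join(path + [current])))
                break
            path.append(current)
            current = redirects[current][1]
    return findings


# Hypothetical redirect map.
REDIRECTS = {
    "/old-pricing": (302, "/pricing"),
    "/a": (301, "/b"),
    "/b": (301, "/a"),
    "/discontinued-widget": (301, "/"),
}

for source, finding in audit_redirects(REDIRECTS):
    print(source, "|", finding)
```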

If you’re seeing a loss in traffic and ranking after a site redesign or noticing lots of new crawl errors in Google Search Console, take time to audit your redirects. By transitioning 302 redirects to 301s, resolving redirect loops, and ensuring deleted pages are pointing to related URLs, you may be able to recover lost traffic and rankings.

6. Dirty Sitemaps

All entries in your sitemap should point to live pages on your site, not 404 errors, 301 or 302 redirects, or server errors (5XX). While Google says these errors in a sitemap aren’t a problem, Bing has little tolerance for sitemap errors. According to Bing’s Duane Forrester,

“Your Sitemaps need to be clean. We have a 1% allowance for dirt in a Sitemap. If we see more than a 1% level of dirt, we begin losing trust in the Sitemap.”

Perform occasional audits of your sitemap to ensure all pages are listed, and all listed pages are live on your site. Delete entries for any pages that have been removed, and update any entries that have been redirected with the new page URLs.
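A sitemap audit comes down to comparing every `<loc>` entry against the status code that URL actually returns, then checking the share of bad entries against Bing's 1% allowance. The sketch below parses a standard `<urlset>` sitemap (not a sitemap index) with Python's `xml.etree.ElementTree`. The sample sitemap, the `status_of` mapping (which you'd build from your own crawl), and the helper names are hypothetical.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(xml_text):
    """Return every <loc> URL listed in a standard <urlset> sitemap."""
    root = ET.fromstring(xml_text)
    return [loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)]


def sitemap_dirt(urls, status_of):
    """Split out entries that don't return 200; URLs with no known status count as dirty."""
    dirty = [u for u in urls if status_of.get(u) != 200]
    ratio = len(dirty) / len(urls) if urls else 0.0
    return dirty, ratio


# Hypothetical sitemap and status codes gathered from your own crawl.
SITEMAP = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.example.com/</loc></url>
  <url><loc>https://www.example.com/old-page</loc></url>
  <url><loc>https://www.example.com/deleted-page</loc></url>
</urlset>"""
STATUS = {
    "https://www.example.com/": 200,
    "https://www.example.com/old-page": 301,
    "https://www.example.com/deleted-page": 404,
}

dirty, ratio = sitemap_dirt(sitemap_urls(SITEMAP), STATUS)
print(f"{ratio:.1%} dirty", "(over Bing's 1% allowance)" if ratio > 0.01 else "")
for url in dirty:
    print("fix or remove:", url, STATUS.get(url))
```

Redirected entries should be replaced with their final URLs, and entries for removed pages deleted.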

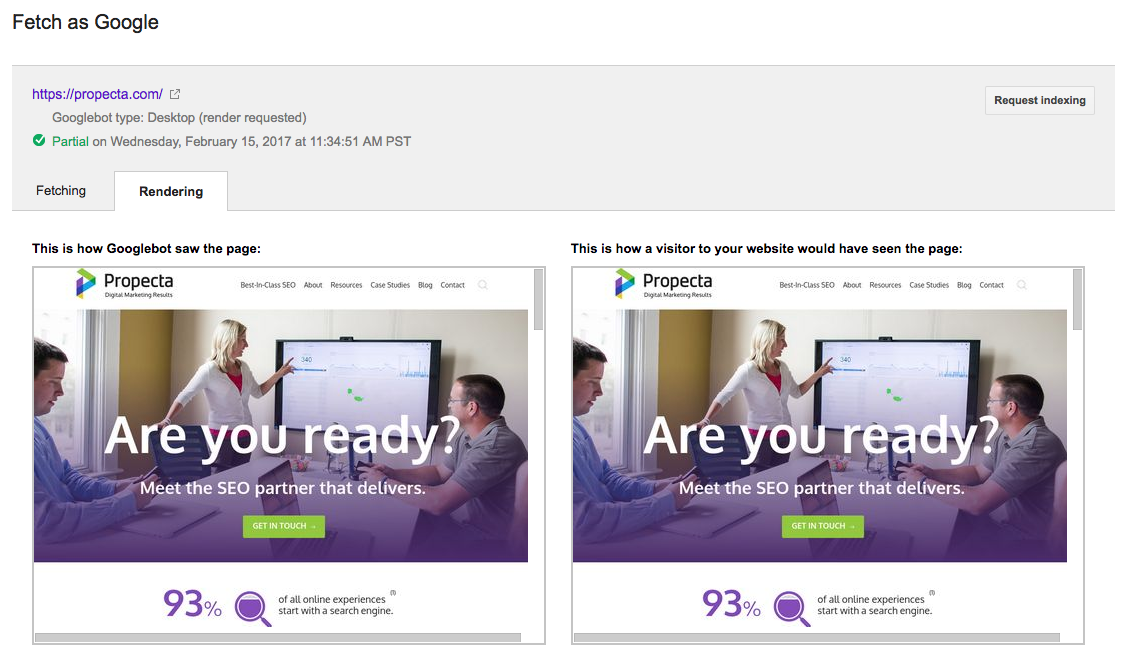

7. Search Engines Aren’t Properly Rendering Your Site

Search engines can have trouble rendering JavaScript-heavy sites. When this happens, rankings can suffer if search engines can't access important page content. If your site relies heavily on JavaScript, you need to make sure that search engines can render all of the page content. You can check this with the URL Inspection tool in Google Search Console:

- Enter the page's URL in the inspection bar at the top of Search Console.

- Click “Test live URL.”

- Click “View tested page” to see the rendered HTML and a screenshot of how Googlebot sees the page.

Review the renderings of your pages to see if all important components are displayed.

If the search engine doesn’t render your display network ads, it’s not an issue. If it doesn’t display important components, tools, or content, it’s not using those elements to determine your ranking. Discuss these issues with your development team to determine if there are more SEO-friendly ways to code important site components.
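As a quick first pass, you can see which important phrases are missing from the HTML your server sends before any JavaScript runs; anything missing there depends on the search engine successfully rendering your scripts. This sketch strips `<script>` and `<style>` contents and searches the remaining text. The sample page, the key phrases, and the helper names are hypothetical, and the check doesn't replace looking at how Google actually renders the page in Search Console.

```python
from html.parser import HTMLParser


class VisibleTextParser(HTMLParser):
    """Collects text from the raw HTML, skipping <script> and <style> contents."""

    def __init__(self):
        super().__init__()
        self.skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self.skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self.skip_depth:
            self.skip_depth -= 1

    def handle_data(self, data):
        if not self.skip_depth:
            self.chunks.append(data)


def missing_from_initial_html(html, key_phrases):
    """Return key phrases absent from the server-sent HTML (before any JavaScript runs)."""
    parser = VisibleTextParser()
    parser.feed(html)
    parser.close()
    # Collapse whitespace and lowercase so matching ignores layout and case.
    text = " ".join(" ".join(parser.chunks).split()).lower()
    return [p for p in key_phrases if p.lower() not in text]


# Hypothetical page whose pricing copy is injected by JavaScript.
RAW_HTML = """<html><body>
<h1>Acme Pricing</h1>
<div id="plans"></div>
<script>document.getElementById("plans").textContent = "Plans start at $10";</script>
</body></html>"""

print(missing_from_initial_html(RAW_HTML, ["Acme Pricing", "Plans start at $10"]))
# -> ['Plans start at $10']
```

Phrases flagged this way are a good starting list for the conversation with your development team about server-side rendering or other SEO-friendly approaches.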

Focus on the Most Important Technical SEO Tasks

If you’ve invested in a machine-run technical SEO audit, you may have a long list of suggestions for issues that need to be resolved. Approach these tasks with caution: not all will result in measurable improvements to your traffic or rankings. Some will require a lot of effort but will produce little value. Save those for a rainy day, and spend your time focusing on important issues.

By focusing on the most important and impactful technical SEO tasks, you'll support the efforts you're putting into engagement SEO. With clean code that search engines can properly crawl, index, and render, technical and engagement SEO work hand-in-hand to increase organic traffic.

What's Next?

Profound Strategy is on a mission to help growth-minded marketers turn SEO back into a source of predictable, reliable, scalable business results.


And dig deeper with some of our best content, such as The CMO’s Guide to Modern SEO, Technical SEO: A Decision Maker’s Guide, and A Modern Framework for SEO Work that Matters.